How to audit data modifications with surgical precision

The problem 🔗︎

This time a colleague from work asked how to best find a culprit that has been changing a specific cell in a table. It could be an automated process, application logic, application user or even an ad-hoc statement - we didn’t know. The table has many different access patterns, some of which are frequent. Ideally, we don’t want to monitor everything and sift through it.

We wanted to learn the who, the how and then ask why? If you like to know the whole journey, read on. Otherwise, you can skip to section Eureka moment

Possible solutions 🔗︎

From the top of my head, I thought of these options:

- SQL Audit

- Data mine the Query Store to find the possible access patterns

- Extended Events (XE) - but what should I filter?

- Trigger on the table + logging table

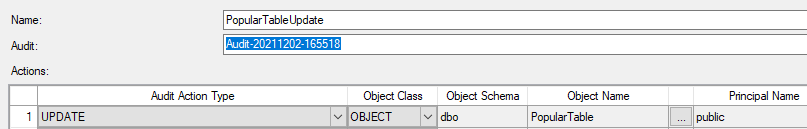

1. SQL Audit 🔗︎

I admit I haven’t used Audit before (I just knew of its existence), so it was an opportunity to try it out. You need to:

- Set up a Server Audit Specification to choose the target file.

- Set up a Database Audit Specification.

- Select Audit Action Type, Object and Principal, and we are good to go.

On the positive side, it captures who and how.

On the negative side, it captures everything. If I have tens of thousands of modifications per hour, I have to collect everything and look for the culprit.

This wouldn’t do.

2. Data mine the Query Store 🔗︎

Query Store has aggregated data, so I knew I won’t be getting any specific query execution. I was here to map out all the access patterns and perhaps pass the signed query hash to Extended Events.

I didn’t have a sophisticated method, just a full-text search on sys.query_store_query_text.

I was looking for the table name along with the MERGE or UPDATE keywords.

But there were just too many hits - it would get me maybe closer, but I still wouldn’t know specifics.

3. Extended Events 🔗︎

At this point, there was still nothing to filter. I could grab all the query hashes I’ve got from mining the Query Store, but the update could come from a different query next time. I could collect everything and filter, but SQL Audit would do a better job.

4. Trigger 🔗︎

Triggers are often misused and get a bad reputation, but this is a perfectly fine use case.

All I need is an AFTER UPDATE Trigger and a logging table - easy peasy.

While the Trigger fires on each UPDATE, I’ll have logic inside to only log information on certain conditions.

I can use the HOST_NAME(), ORIGINAL_LOGIN() and other functions to capture the who info.

What I was missing was how? Was it an ad-hoc statement, Stored Procedure or something else? I wanted to capture the input buffer. I thought there might be a function for that but couldn’t find it.

All I needed was to query the DMVs. But that requires a VIEW SERVER STATE permission.

That invalidates the Trigger, so I need to use the Module Signing.

That means creating a certificate, creating a Login, etc. Which felt like a lot of work for a simple auditing task.

Especially, if you do that infrequently. Or when you are new to Module Signing.

It’s a shame! I already had the who figured out. I was halfway there!

Eureka moment 🔗︎

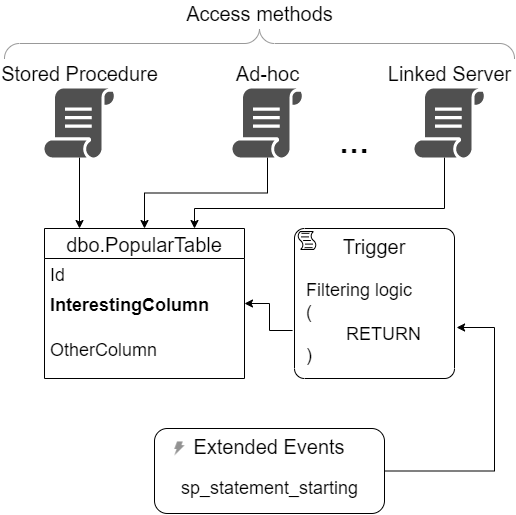

Why do I have to use just one tool to finish the job? I wanted to combine the Query Store hash information with the Extended Events. I can instead combine it with the Trigger!

Within the Trigger, I have logic to filter the exact changes I’m interested in.

And with the Extended Events, I can monitor only the statement in my Trigger logic and grab all the other auditing info - especially the input buffer and the tsql stack.

Here’s a diagram:

Finally, I had both the who and how!

The proof 🔗︎

Let’s create a table and some data.

DROP TABLE IF EXISTS dbo.PopularTable

CREATE TABLE dbo.PopularTable

(

Id int PRIMARY KEY

, InterestingColumn int NOT NULL /* Monitor this column */

, OtherColumn int NOT NULL

)

INSERT INTO dbo.PopularTable

(Id, InterestingColumn, OtherColumn)

VALUES

(1, 0, 0)

, (2, 0, 0) /* Monitor this row */

, (3, 0, 0)

Next, I’ll create an AFTER UPDATE Trigger on this table. Inside I’ll check just updates to row with an ID = 2 and InterestingColumn.

Additionally, I only want to alert when the value changes.

CREATE OR ALTER TRIGGER dbo.PopularTableUpdateInterestingColumn ON dbo.PopularTable

AFTER UPDATE

AS

BEGIN

IF EXISTS

(

SELECT InterestingColumn FROM Inserted WHERE Id = 2

EXCEPT

SELECT InterestingColumn FROM Deleted WHERE Id = 2

)

BEGIN

RETURN

END

END

GO

/* Set the trigger order to 'Last' for a good measure */

exec sp_settriggerorder

@triggername = 'dbo.PopularTableUpdateInterestingColumn'

, @order='Last'

, @stmttype = 'UPDATE'

Apart from the check, the Trigger doesn’t do anything.

We will set up an Extended Events session that will watch the execution of this Trigger but only the RETURN statement inside the IF - which has a query_hash_signed = 0.

CREATE EVENT SESSION [MonitorPopularTableTrigger] ON SERVER

ADD EVENT sqlserver.sp_statement_starting

(

SET

collect_object_name = 1

ACTION

(

server_instance_name

, server_principal_name

, client_app_name

, client_hostname

, client_pid

, session_id

, sql_text

, tsql_stack

, query_hash_signed

)

WHERE

[object_name] = N'PopularTableUpdateInterestingColumn'

AND query_hash_signed = 0

)

For your use case, you’ll want to add a file event target as well as tweak the collected actions.

In this case, Watch Live Data will do just fine.

With all the pieces arranged all that is left is some access code and test scenarios.

I’ll create a stored procedure to test the first few test cases and then use ad-hoc UPDATE and MERGE statements.

CREATE OR ALTER PROCEDURE dbo.UpdatePopularTable

(

@Id int

, @InterestingColumn int

, @OtherColumn int

)

AS

BEGIN

UPDATE dbo.PopularTable

SET

InterestingColumn = @InterestingColumn

, PopularTable.OtherColumn = @OtherColumn

WHERE Id = @Id

END

Let’s call each test in separate batches, so our monitoring sql_text is clean. Don’t forget we are only interested in row 2 InterestingColumn change.

Test case 1 🔗︎

- Change via Stored Procedure

- Update InterestingColumn

- Update row 1

- Nothing should show up in XE

/* Test 1 */

EXEC dbo.UpdatePopularTable

@Id = 1

, @InterestingColumn = 1

, @OtherColumn = 0

Test case 2 🔗︎

- Change via Stored Procedure

- Update OtherColumn

- Update row 2

- Nothing should show up in XE

/* Test 2 */

EXEC dbo.UpdatePopularTable

@Id = 2

, @InterestingColumn = 0

, @OtherColumn = 1

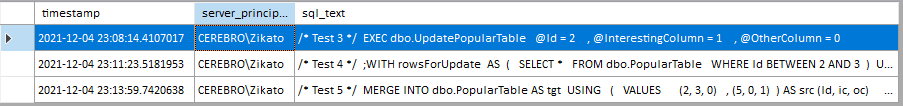

Test case 3 🔗︎

- Change via Stored Procedure

- Update InterestingColumn

- Update row 2

- There should be a captured event

/* Test 3 */

EXEC dbo.UpdatePopularTable

@Id = 2

, @InterestingColumn = 1

, @OtherColumn = 0

Test case 4 🔗︎

- Change via ad-hoc

UPDATEstatement - Update InterestingColumn

- Update multiple rows including the row 2

- There should be a captured event

/* Test 4 */

;WITH rowsForUpdate

AS

(

SELECT *

FROM dbo.PopularTable

WHERE Id BETWEEN 2 AND 3

)

UPDATE rowsForUpdate

SET

InterestingColumn = 2

Test case 5 🔗︎

- Change via ad-hoc

MERGEstatement - Update InterestingColumn

- Update row 2

- Insert additional row - to justify

MERGEoverUPDATE - There should be a captured event

/* Test 5 */

MERGE INTO dbo.PopularTable AS tgt

USING

(

VALUES

(2, 3, 0)

, (5, 0, 1)

) AS src (Id, ic, oc)

ON tgt.Id = src.Id

WHEN MATCHED THEN

UPDATE SET tgt.InterestingColumn = src.ic

WHEN NOT MATCHED THEN

INSERT (Id, InterestingColumn, OtherColumn)

VALUES

(src.Id, src.ic, src.oc);

Results 🔗︎

As expected - the first two tests resulted in no event captured. The other three are there with their respective sql_text.

Warning! 🔗︎

There is one risk I’ve ran into in the past and that’s adding a trigger could break an “unrelated” code.

Error 334 says:

Let’s see this in action

UPDATE dbo.PopularTable

SET PopularTable.InterestingColumn = 1

OUTPUT Deleted.*

WHERE PopularTable.Id = 2

This gives us the error above. The fix is to output into a table variable and then read from it

DECLARE @outputTable AS TABLE

(

Id int NOT NULL

, InterestingColumn int NOT NULL

, OtherColumn int NOT NULL

)

UPDATE dbo.PopularTable

SET PopularTable.InterestingColumn = 1

OUTPUT Deleted.* INTO @outputTable

WHERE PopularTable.Id = 2

SELECT

*

FROM @outputTable AS ot

Personally, I think removing the “naked” output is the way to go, because there are more valid cases for adding triggers.

Get alerted on new events 🔗︎

Now, this is well beyond the scope of this article, but you can use dbatools Powershell module to automate alerting. You can tweak this snippet to add a smart target to our existing XE session, and you’ll get an email notification on the next event. Caveat: this keeps an open connection to the server which listens to the XE session.

$params = @{

SmtpServer = "YourSmtp"

To = "[email protected]"

Sender = "[email protected]"

Subject = "PopularTable Trigger fired on {server_instance_name}"

Body = "

Timestamp: {collection_time}

Client Application name: {client_app_name}

Hostname: {client_hostname}

Login {server_principal_name}

Text: {sql_text}

Stack: {tsql_stack}

"

}

$emailresponse = New-DbaXESmartEmail @params

Start-DbaXESmartTarget -SqlInstance 'YourInstance' -Session 'MonitorPopularTableTrigger' -Responder $emailresponse

# Clean up all smart targets with Get-DbaXESmartTarget | Remove-DbaXESmartTarget